VIDEO TRANSCRIPT

Description generated by Skrybot

No description was generated for this video.

Transcript generated by Skrybot

This is a number, and this is 70,000 of them, and today I'm going to be teaching an AI to recognize these handwritten numbers. In fact, I'm going to train multiple models, from incredibly simple models to very complex ones, and at the end I'm going to test if any of my models can get 99% accuracy. We're going to train four models: a super dumb one, a less dumb one, a pretty smart one, and AlexNet. Each is going to be smarter than the previous one, so it's going to learn the numbers better, but we'll get to those in a sec.

So the way this is going to work is that we're going to feed the AI a bunch of images so it can learn the numbers. We're going to be using the MNIST data set, which is a data set of 70,000 28 by 28 pixel grayscale images of handwritten digits. This data set is commonly used to test and train image recognition models, and it's kind of like the first thing you do when you learn about neural networks. So I'm going to split the 70,000 images into two groups: a group of 60,000 images with which I'm going to train the model, and a group of 10,000 images with which I'm going to test the model.
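A minimal sketch of the 60,000 / 10,000 split described above, using plain indices as a stand-in for the images. The function name `split_dataset` and the random shuffle are assumptions for illustration; the video does not show how the split is done, and MNIST is usually distributed with this split already made.

```python
import random


def split_dataset(samples, n_train, seed=0):
    """Shuffle a copy of `samples` and split it into (train, test)."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


# Stand-in for the 70,000 MNIST images: just their indices.
indices = range(70_000)
train_idx, test_idx = split_dataset(indices, n_train=60_000)

print(len(train_idx), len(test_idx))  # 60000 10000
```

The important property is that no image ends up in both groups, so the quiz only contains images the model has never studied.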

So I'm going to give each model the first group of images so it can study and then I'm going to quiz it on the other group. Let's start with a super dumb model. It would only have one layer. This is what's called a simple linear classifier. Okay so let's first download the data set and here we have it. As you can see each image comes with the correct label so what we can do is pass a random image through the model and see what it predicts. Then we can calculate how wrong the model was and adjust all the little parameters to do better and better each time. This is what's called backpropagation.
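The predict, measure, adjust loop described above can be sketched for a one-layer classifier as follows. This is an illustration under assumptions, not the code from the video: it assumes a softmax output with cross-entropy loss and plain gradient descent, and it uses a made-up two-"pixel", two-class toy data set instead of MNIST. With a single layer the gradient has a closed form; backpropagation is what computes the same kind of gradient through deeper networks.

```python
import math
import random


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_probs(weights, biases, x):
    """One linear layer: a score per class, turned into probabilities."""
    scores = [sum(w_j * x_j for w_j, x_j in zip(w, x)) + b
              for w, b in zip(weights, biases)]
    return softmax(scores)


def train_step(weights, biases, x, label, lr=0.1):
    """One gradient-descent update on a single (input, label) pair."""
    probs = predict_probs(weights, biases, x)
    loss = -math.log(probs[label])  # how wrong the model was
    for k in range(len(weights)):
        # Gradient of cross-entropy w.r.t. score k is p_k - 1[k == label].
        grad = probs[k] - (1.0 if k == label else 0.0)
        for j in range(len(x)):
            weights[k][j] -= lr * grad * x[j]
        biases[k] -= lr * grad
    return loss


# Hypothetical toy data: class 0 lights up pixel 0, class 1 lights up pixel 1.
data = [([1.0, 0.0], 0), ([0.9, 0.1], 0), ([0.0, 1.0], 1), ([0.1, 0.9], 1)]
weights = [[0.0, 0.0], [0.0, 0.0]]
biases = [0.0, 0.0]

rng = random.Random(0)
losses = []
for epoch in range(50):
    rng.shuffle(data)
    epoch_loss = sum(train_step(weights, biases, x, y) for x, y in data)
    losses.append(epoch_loss / len(data))


def classify(x):
    """Return the class with the highest predicted probability."""
    probs = predict_probs(weights, biases, x)
    return probs.index(max(probs))


print(f"first epoch loss {losses[0]:.3f}, last epoch loss {losses[-1]:.3f}")
```

For MNIST the same idea would use 784 inputs and 10 classes, and in practice a library computes the gradients automatically.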

I'm not even going to attempt to explain how this works because, first, I'm not nearly qualified enough to do it, and secondly, there are a bunch of great YouTube videos explaining this concept, so I'll link some of them down below. And after you do this enough times the model gets better and better until it reaches a point where it stops improving and actually starts to get worse and worse. But anyway, let's get back to the model. Okay, so the super dumb model took a whopping 19 seconds to train, and look at that, it has an accuracy of 91.29 percent. Holy, that's pretty good.

So this means that for every 10 images it gets roughly one wrong. So for example, in this case it thought this was an 8 and it thought this was a 6, and to be honest I can see this being a difficult 5 to guess. However, it also got wrong some really easy numbers, like look at this 4 right here. Come on man, that's an easy 4. But don't worry, because that's when our next model comes in: the less dumb model. For this I'm gonna max out the middle layers. It's gonna go from having only one neuron to having 256, and I'm gonna have two of those layers. Yeah baby, let's go.

And remember, we're trying to hit 99% accuracy, so maybe we can even hit it with this model. Okay, let's train it, and after only 35.6 seconds we have 92% accuracy. Okay, okay, that's a 0.96 percent improvement. I'll take that, but not gonna lie, I thought this would go much better. I still can't really understand why this isn't performing better, and I even tried to train it for longer but it didn't work. I think it has to do with the number of weights and biases our model has, but honestly I have no idea. I mean, it's still a great accuracy, but it's still getting really basic numbers wrong.
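To put numbers on the "weights and biases" mentioned above, here is a small sketch that counts the parameters of a stack of fully connected layers. The layer sizes are assumptions, since the transcript does not show the model code: 784 inputs (28 by 28 pixels flattened), 10 outputs (one per digit), and for the less dumb model two hidden layers of 256 neurons.

```python
def count_parameters(layer_sizes):
    """Weights plus biases for a stack of fully connected layers."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Assumed shapes, not taken from the video's code.
super_dumb = count_parameters([784, 10])
less_dumb = count_parameters([784, 256, 256, 10])

print(super_dumb)  # 7850
print(less_dumb)   # 269322
```

Under these assumptions the second model has far more parameters than the first, so a lack of parameters alone would not explain the small gain in accuracy.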

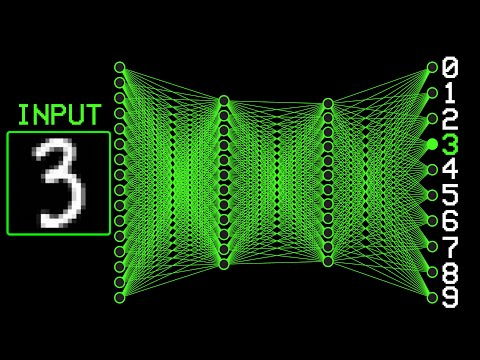

Let's move on to the next model, the pretty smart one. This is going to be a CNN, or in other words a convolutional neural network, and here the structure of our network completely changes. So instead of just having a fully connected neural network, now we're also gonna have something called feature extraction. So before passing the image to our network we're going to extract the main features of that image, and we do this through convolutions, which are basically filters and different ways to analyze the image. So now instead of just passing 784 pixel values to our network, we're calculating the main features of that image and then passing them to our model.
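As a rough illustration of what a convolution "filter" and max pooling do, here is a pure-Python sketch on a tiny 6 by 6 image. The image and the vertical-edge kernel are made up for the example; in a real CNN the kernel values are learned during training rather than written by hand.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image with no padding and stride 1.

    This is cross-correlation, which is what deep learning libraries
    usually call convolution.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; leftover rows and columns are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [[max(feature_map[i * size + a][j * size + b]
                 for a in range(size) for b in range(size))
             for j in range(out_w)]
            for i in range(out_h)]


# Toy image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# Hypothetical hand-written filter that responds to dark-to-bright edges.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

features = conv2d(image, kernel)   # 4x4 map, large where the edge is
pooled = max_pool2d(features)      # 2x2 summary of the strongest responses

print(features[0])  # [0, 3, 3, 0]
print(pooled)       # [[3, 3], [3, 3]]
```

The feature map is zero over the flat regions and large where the edge sits, which is the kind of "main feature" that then gets passed on to the classifier.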

Again, completely unqualified to be talking about this, so I'll link some videos down below. And so the structure of the pretty smart model is this: a feature extraction block that has convolutional layers and max pooling, and also our good old linear classifier. Let's train it and see what happens. Okay, 97% accuracy on this first training loop. 98 on the second one. This is going great, we might actually hit 99. Oh, oh, 98.5, 98.6. Whoa, almost there, and this only took 96 seconds to train and it got 98.6% accuracy. That's pretty impressive.

Look at this beast, it's getting almost every number right, and the ones it's not getting are difficult to get. This five right here kind of looks like a z, so that's why it got it wrong. And come on, this zero right here hasn't even been fully drawn. These are all numbers I got from the MNIST dataset, but I wanted to see if it could recognize numbers that I'd draw. So let's hop over to Photoshop and draw a little bit. I'm going to draw 20 numbers, and the first 10 are going to be like really easy. These ones our model should get easy peasy.

And then I'm going to draw 10 other images that I think should be a little bit harder. And here are the results. It looks like our model got every image right except the nine, and I don't know in what world that's a three, but okay, I'll let that slip. Let's check out the hard images. Honestly, not bad. It got every image right except this one. The model predicted a seven for this number, and I get that it kind of looks like a seven, and if we look at the probabilities from the model we can see that it's 76% sure that it's a seven and five percent sure that it's a one.
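Per-digit probabilities like the ones quoted above are commonly obtained by applying a softmax to the model's ten raw output scores. Whether the video's model does exactly this is an assumption, and the scores below are invented for illustration; they are not the model's actual outputs.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for the digits 0 through 9.
logits = [0.1, 1.5, 0.3, 0.2, 0.0, -0.5, -1.0, 4.2, 0.4, 0.6]
probs = softmax(logits)

# Digits ranked from most to least likely.
ranking = sorted(range(10), key=lambda d: probs[d], reverse=True)
predicted = ranking[0]

for digit in ranking[:3]:
    print(f"digit {digit}: {probs[digit]:.1%}")
```

The prediction is simply the digit with the highest probability, and the remaining probabilities show which other digits the model considered plausible.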

So not too bad, and now the moment we have all been waiting for: AlexNet. This puppy has a total of five convolution layers, five ReLU activation functions, max pooling and 8192 interconnected neurons. It's a beast. There's only one thing left. Train it and see if it has an accuracy of 99%. This is it, and after exactly 10 minutes and 10 seconds we have 99.21% accuracy. Whoo, let's go. We did it. Just look at this. AlexNet is classifying numbers left and right and getting every one of them correct. I mean, just look at this confusion matrix.

This shows the predicted values against the true values so we can see how well our model is performing. And just look at this. It's getting almost everything right. Maybe the only problem it's having is that it's predicting some sevens as twos, but besides that it's perfect. And just as a side note, now that we have trained this model we can use it with other data sets. For example, Fashion-MNIST, which is a data set just like MNIST, but instead of numbers there's images of pieces of clothing. I linked all the code I use for the model so you can play around and change anything you want.
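A confusion matrix like the one described above can be sketched in a few lines. The labels here are a made-up three-class example, not results from the video; for MNIST the matrix would be 10 by 10.

```python
def confusion_matrix(true_labels, predicted_labels, n_classes):
    """matrix[t][p] counts samples whose true class is t and prediction is p."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(true_labels, predicted_labels):
        matrix[t][p] += 1
    return matrix


def accuracy(matrix):
    """Fraction of samples on the diagonal, i.e. predicted correctly."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


# Hypothetical labels: one sample of class 2 is mistaken for class 1.
true_labels = [0, 0, 1, 1, 2, 2, 2, 2]
predicted = [0, 0, 1, 1, 2, 2, 1, 2]

cm = confusion_matrix(true_labels, predicted, n_classes=3)
for row in cm:
    print(row)
# [2, 0, 0]
# [0, 2, 0]
# [0, 1, 3]
print(accuracy(cm))  # 0.875
```

Off-diagonal entries show which classes get mixed up: a systematic confusion such as sevens predicted as twos would appear as a large count in row 7, column 2.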

It's on a Google Colab notebook so it runs on Google servers and your computer doesn't have to lift a finger. Also, if you want to try this, make sure to change the runtime settings to the GPU so it runs faster. Consider donating to my fundraiser. I'm trying to raise 10,000 dollars for diabetes by the end of 2023. Thank you so much for watching and I'll see you in my next video.

Skrybot. 2023, YouTube video transcripts